Particle Merging¶

Be careful when using this module and read carefully the documentation.

Note that the nomenclature might change

The ability to merge macro-particles can speed-up the code efficiency and reduce the memory footprint in some specific simulation scenarii:

When macro-particles accumulate in a fraction of the simulation domain hence strongly worsening the load imbalance (ex: Weibel collision shocks, laser wakefield electron acceleration).

When macro-particles are generated in a large quantity due to some additional physical mechanisms (ionization, macro-photon emission, QED pair production…)

When macro-particles travel in large quantities outside interesting physical regions.

Smilei’s merging method is inspired from that of M. Vranic [Vranic2005] with some additions.

Please refer to that doc for the definitions in the namelist file.

Understand the method¶

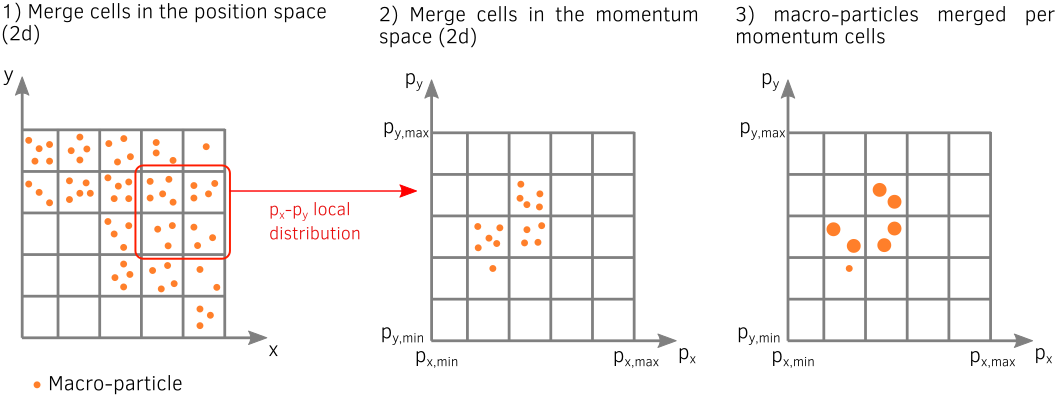

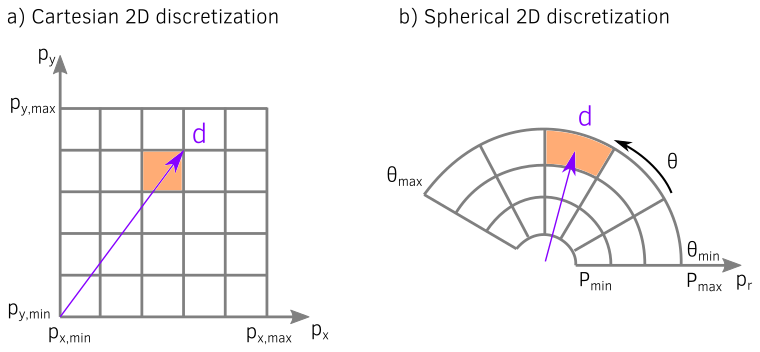

The method of M. Vranic, illustrated (in 2D) in Fig. Fig. 47, consists in 3 main steps:

Decompose macro-particles into groups according to their location so that they have nearby positions. In Smilei, macro-particles are sorted by field cells, even though, in Vranic et al., the decomposition can be larger than just one cell.

Subdivide groups into sub-groups in momentum space so that macro-particles share close kinetic properties.

Merge macro-particles located in the same sub-group into 2 new macro-particles, while conserving charge, energy and momentum.

Fig. 47 Basic description of M. Vranic merging method in 2D geometry.¶

This method has several advantages:

it is relatively easy to understand and implement,

it has a relatively low computational cost,

it is efficient without impacting significantly the physical results.

Warning

This assumes that the parameters are adequately tuned. Otherwise, the macro-particle merging can affect the final simulation results.

1. Momentum sub-groups¶

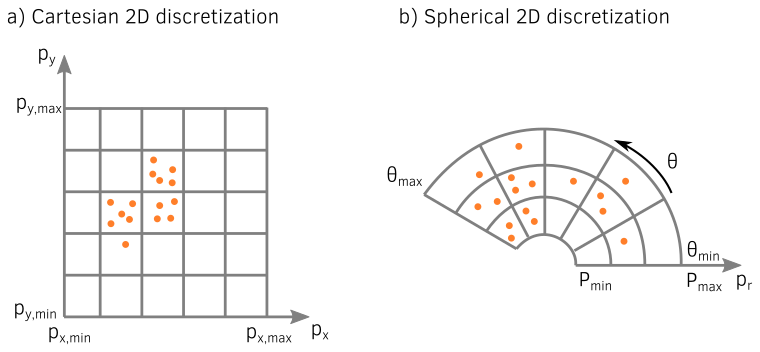

The momentum (\(\mathbf p\)) space may be decomposed either in cartesian (\(p_x\), \(p_y\), \(p_z\)) or spherical (\(p\), \(\theta\), \(\phi\)) coordinates.

In each cell, for each coordinate \(\alpha\):

we compute the overall limits in momentum space.

This space is divided in \(N_{\alpha}\) sub-groups (as prescribed by the user) of length \(\Delta_{\alpha}\).

Fig. 48 Cartesian and spherical momentum discretizations, in 2D.¶

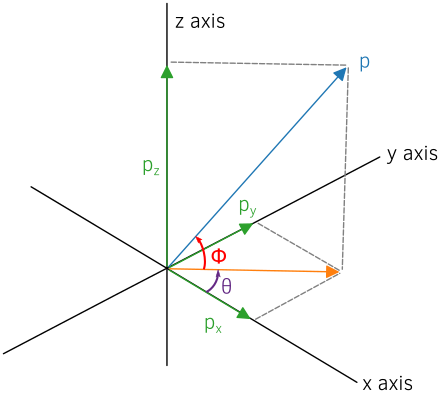

The spherical components are related to the Cartesian momentum components by:

Fig. 49 Spherical coordinates used for the momentum cell discretization.¶

Since macro-particle momentum components are defined in Cartesian geometry by default, the spherical discretization induces small additional computation. However, it makes the merging process more accurate. Indeed, in the Cartesian discretization, the maximum angle between the momentum directions of two macro-particles located in the same sub-group depends on the sub-group. For instance, in the cell adjacent to the origin \(p_x = p_y = p_z = 0\), this angle equal up to \(\pi / 2\) whatever the discretization. The spherical geometry ensures that the merging accuracy depends on the discretization and is similar for all momentum cells. The overhead induced by the change of geometry is a small fraction of the entire process.

2. Merging algorithm for massive macro-particles¶

In step 3, for each sub-group containing more than 4 macro-particles, the algorithm merges them into 2 macro-particles. Let us denote by \(k\) the macro-particles in a given sub-group \(\mathrm{M}\), their weights by \(w_k\), their energies by \(\varepsilon_k\) and their momenta by \(\mathbf{p}_k\). We start by computing total quantities:

In spherical geometry, the total angles are also defined:

Merging all the macro-particles into one cannot always conserve weight, energy and momentum. Vranic et al. propose to merge into 2 macro-particles:

The following energy-momentum relation has to be satisfied for both macro-particles a and b:

To simplify the problem, Vranic et al assume that merged macro-particles have the same weight \(w_a = w_b = w_t / 2\) and same energy \(\varepsilon_a = \varepsilon_b = \varepsilon_t / w_t\).

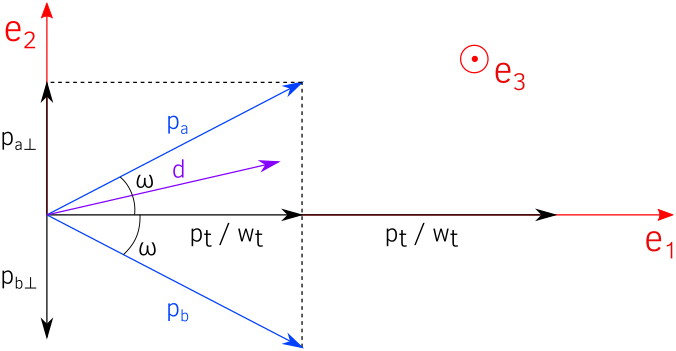

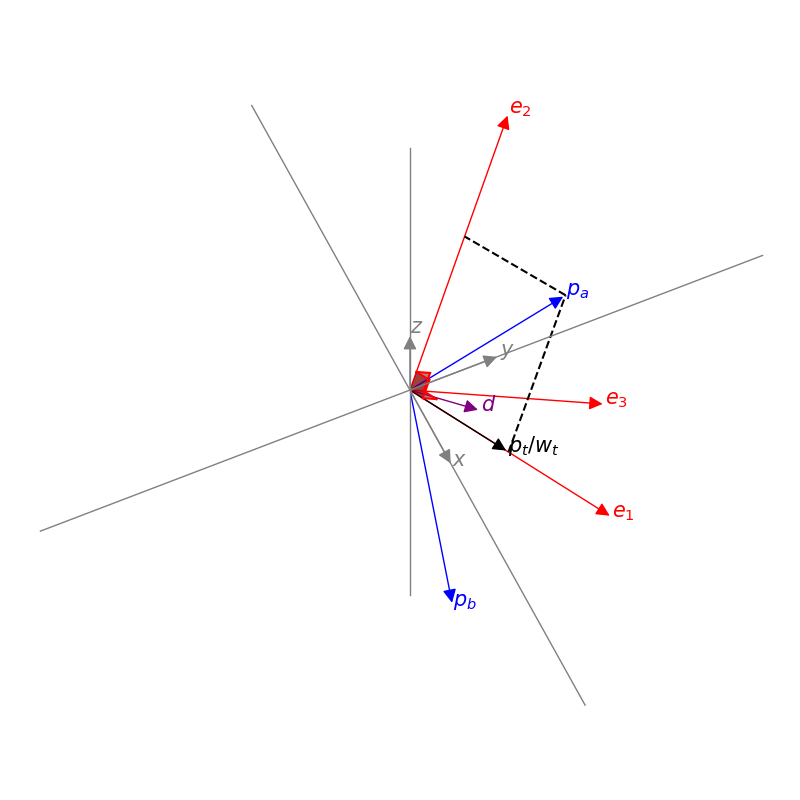

Fig. 50 View of the plane made by vector \(\mathbf{d}\) and \(\mathbf{p_t}\). The corresponding Cartesian frame is given by \((\mathbf{e_1}, \mathbf{e_2}, \mathbf{e_3})\).¶

As illustrated in Fig. 50, it follows that:

We denote by \(\omega\) the angle between \(\mathbf{p_a}\) and \(\mathbf{p_t}\) so that:

We denote by \(\mathbf{d}\) the coordinate vector of the sub-group where the macro-particles are located.

Fig. 51 Sub-group coordinate vector in Cartesian and spherical geometries.¶

The plane \((\mathbf{e_1},\mathbf{e_2})\) is the plane made by the vectors \(\mathbf{p_t}\) and \(\mathbf{d}\). We choose that it contains \(\mathbf{p_a}\) and \(\mathbf{p_b}\) so that we have only one possible solution.

The vectors \(\mathbf{e_1}\) and \(\mathbf{e_2}\) are given by the following formula in the PIC code’s momentum frame:

Finally, the new macro-particle momentums are:

Fig. 52 3D view of the different vectors involved in the merging method. Generated by this python script.¶

The new macro-particle positions are assigned the position of one of the merged macro-particles. We have tested to assign them randomly or to the first macro-particles of the merged list and we did not observe any difference.

This algorithm does not work when the total momentum \(\mathbf{p}_t\) of the macro-particles to be merged is in the direction of \(\mathbf{d}\). In this case \(|| \mathbf{e_3} || = 0\) and the system cannot be solved. In this specific case, the merging is not performed.

3. Merging algorithm for macro-photons¶

Macro-photons can be merged with the same algorithm, the only difference being that the momentum norm is equal to the energy \(\varepsilon = p\).

When the total momentum \(\mathbf{p}_t\) is in the direction of \(\mathbf{d}\), macro-photons can be merged into a single one, contrary to the massive macro-particles case, since \(\varepsilon_t = || \mathbf{p}_t ||\). This specific situation is implemented in the code.

Implementation details¶

The Vranic merging method is implemented with both Cartesian

and the spherical discretizations in the directory src/Merging.

It is considered as a particle operator and the merging algorithm is

managed with a factory (MergingFactory.h) as

any operator with multiple implementations.

The Cartesian implementation is done in the class MergingVranicCartesian

and the spherical one in MergingVranicSpherical.

For both methods, the implemented algorithm is very similar.

For each cell:

Initialization of the sub-group discretization

Computation of the direction vectors (\(\mathbf{d}\)): this step depends on the discretization and can be efficiently vectorized.

Computation of the sub-group indexes for each macro-particle. Efficiently Vectorizable.

Computation of the number of particles per sub-group. Not vectorizable because of random memory accesses.

Computation of the index of each sub-group in the sorted array of particles (only the particle indexes are sorted). Not vectorizable.

Sorting of the macro-particles per sub-groups, the index previously computed determines where each sub-group starts. Not vectorizable.

Then, for each sub-group:

Division of the macro-particles of the sub-groupinto packets (size depends on the user parameters

merge_min_packet_sizeandmerge_max_packet_size)Merge of the packs using the previously described Vranic algorithm. Partly vectorized.

Creation of the merged macro-particles at the position of the previous ones

Tag of the macro-particles to be removed

Then, once the merging finished for a given patch:

Compression of the macro-particle list (remove hole in arrays let by removed and tagged particles). By cleaning the particle vector at the end, we limit the computational impact of this step.

1. Cartesian sub-group discretization¶

How to discretize momentum space is in fact one of the most important points. The user provides \(N_x\), \(N_y\) and \(N_z\) via the namelist, but it may be slightly adjusted for algorithmic reasons:

If the momentum space is very narrow in one direction, only one sub-group may be used.

We force the origin (\(p = 0\)) to delimit two sub-groups so that a sub-group cannot contain two opposite momenta. This may require an extra sub-group to fit the whole momentum space.

2. Spherical sub-group discretization¶

The user provides \(N_p\), \(N_\theta\) and \(N_\phi\) via the namelist, but adjustments may occur:

If the momentum space is very narrow in one direction, only one sub-group may be used.

The \(\Delta_{\alpha}\) are modified by a factor 1.01 to include the maximum boundary.

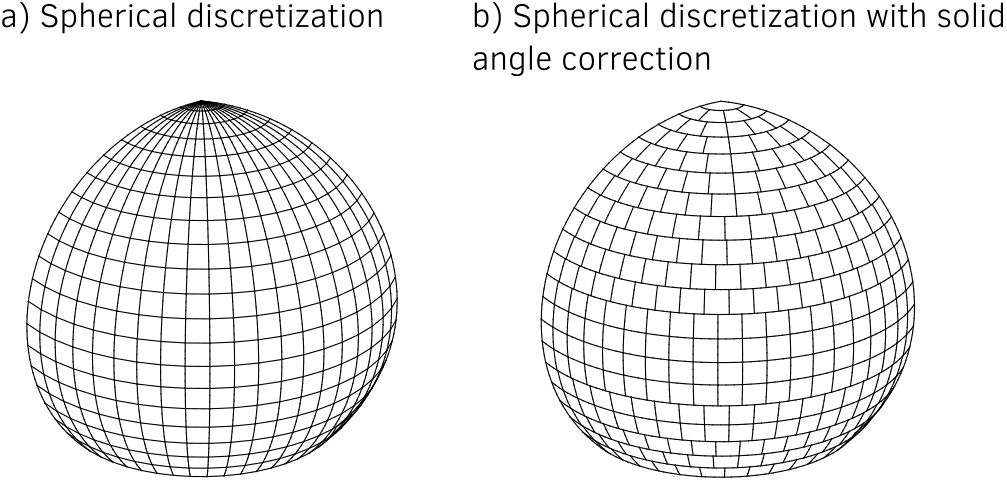

3. Solid-angle correction in 3D¶

A rudimentary spherical discretization does not ensure that all sub-groups span similar solid-angles, as they becomes arbitrarily small at the poles.

Fig. 53 Rudimentary spherical discretization (a) and the spherical discretization with solid angle correction (b). This figure was generated with the following Python script.¶

To obtain a solid angle approximately constant, the discretization in \(\theta\) is adjusted depending on the value of \(\phi\). Denoting by \(\Omega_{0}\) the solid angle at the smallest \(|\phi|\), the sub-groups length \(\Delta_\theta\) along \(\theta\) varies to satisfy \(\Omega = \sin{(\phi)}\Delta \theta \Delta \phi = \Omega_{0}\).

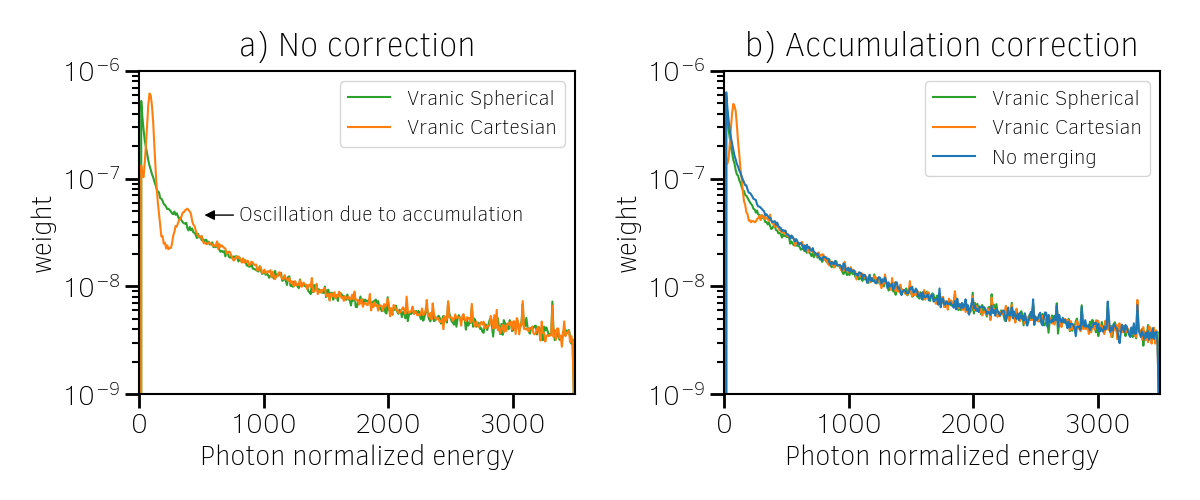

4. Accumulation effect¶

In one merging event, the strongest contribution comes from weightiest macro-particles, which mostly come from a previous merging event instead of the smaller macro-particles. Some macro-particles may thus become uncontrollably heavy and dominate others with little changes in their kinetic properties. This effect may be particularly strong with large sub-groups and broad momentum distributions, which are not well conserved.

To illustrate this phenomenon, let us consider a 3D magnetic shower benchmark: the domain is filled with an electron-positron plasma with all macro-particles initialized using the same Lorentz factor \(\gamma = 8125\) in the same direction. They propagate orthogonally to a constant and uniform magnetic field of amplitude \(B = 1000 e/(m\omega)\), corresponding to a quantum parameter of \(\chi = 20\) for both electrons and positrons. The input script of this simulation is available here.

This accumulation effect creates peaks in the photon energy distribution as shown in Fig. 54 a).

Need to explain the correction.

Fig. 54 Photon energy distribution at the end of the simulation.¶

Fig. 55 Photon px-py momentum distribution at the end of the simulation.¶

Warning: the accumulation correction is not working with the logarithmic discretization.

5. Logarithmic scale¶

For the spherical discretization only, the momentum norm \(p\) discretization may be chosen logarithmically scaled. Due to the logarithm computation, this option is slightly slower than the linear version. Nonetheless, it can handle more appropriately broad momentum distributions.

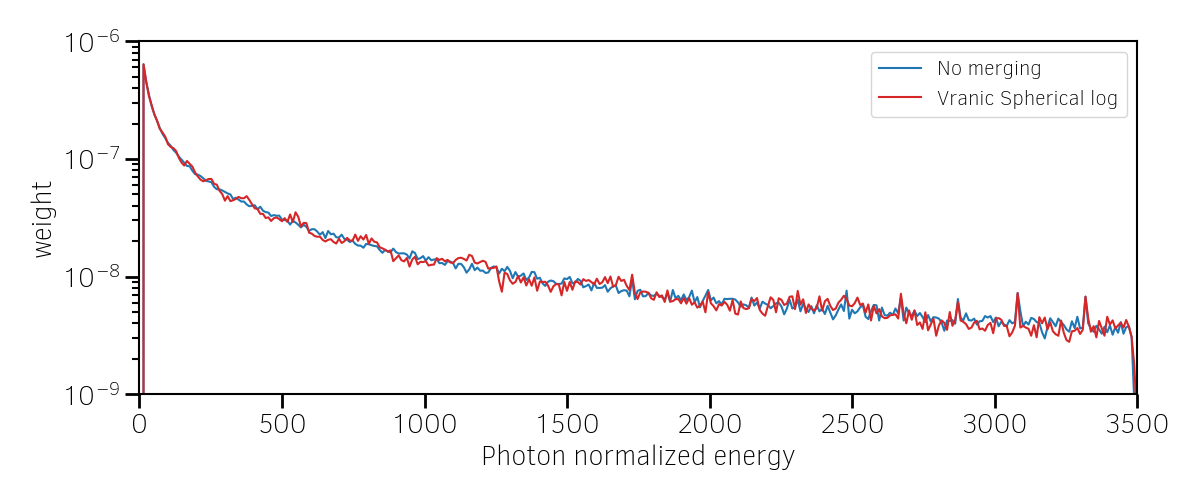

On the magnetic shower case presented in the previous section, the logarithmic discretization reproduces nicely the distribution obtained without merging.

Fig. 56 Photon energy distribution for the 3D magnetic shower benchmark at the end of the simulation.¶

Warning: the logarithmic discretization is subject to accumulation oscillations but is not compatible with the accumulation correction.

Simulation results¶

1. 3D QED cascade¶

In this section, the particle merging is tested with a 3D scenario of electron-positron pair cascading. Like the magnetic shower, a seed of electrons or positrons in a strong electromagnetic field generates high-energy photons that, in turn, decay into electron-positron pairs. The difference is that the seed particles and the newly created ones may gain some energy from the fields and participate to the generation of pairs. The production of electron-positron pairs can therefore be maintained (the cascade) as long as there is a source of energy. In such a scenario, we can expect an exponential growth of the number of particles leading to the creation of an electron-positron plasma.

A plasma of electrons and positrons (the seed) is initially irradiated by two counter-propagating lasers (strong field and source of energy), chosen as circularly-polarized plane waves.

Fig. 57 QED pair cascade configuration with two counter-propagating lasers.¶

When they collide, the two waves form a steady plane wave of very strong amplitude able to trigger strong QED effects. Detailed simulation parameters are available in this namelist.

An aggressive merging process is performed at every timestep with a relatively sparse momentum-space discretization. Note that, in 1D and 2D, it could use smaller sub-groups and be performed more often as there would be more particles per cell. The merging is applied on all species. All cases are run during a maximum of 1000 seconds.

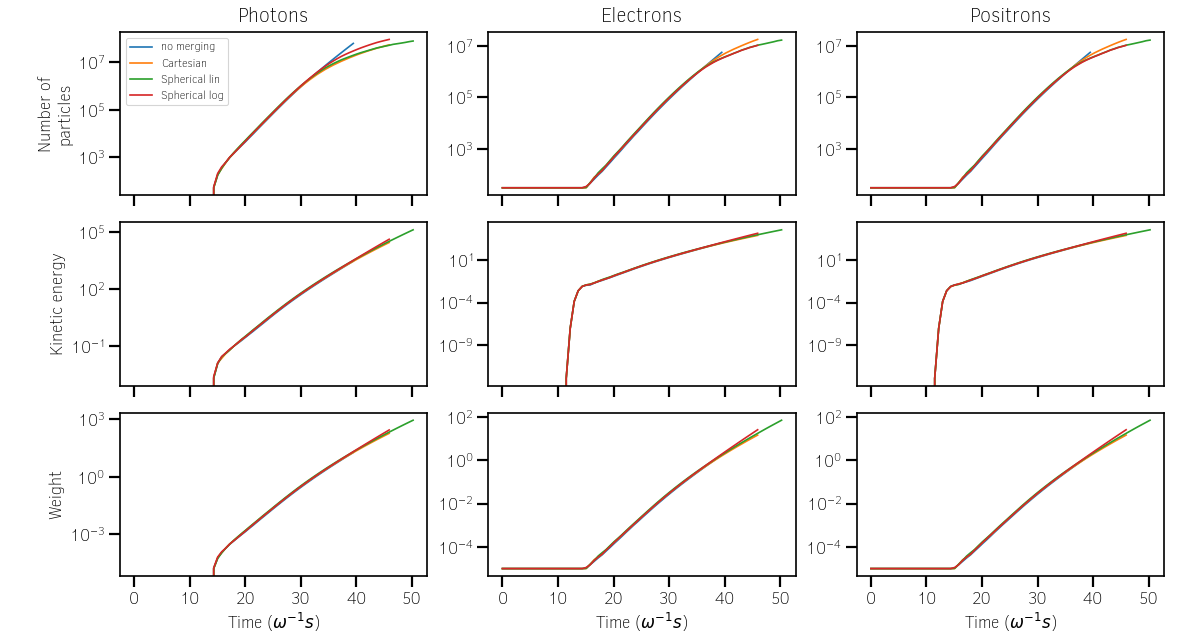

As presented in Fig. 58, the merging process starts when the number of macro-particles is high enough: \(\sim 10^5\) macro-photons. Between 10% and 20% more iterations are achieved, compared to the no merging case.

Each merging method does not exactly gives the same kinetic energy and weight evolution. As we will see, the merging processes modify the momentum distribution and influence the physical processes.

Fig. 58 Effect of various merging configurations on the number of macro-particles, their total weight, and their total kinetic energy.¶

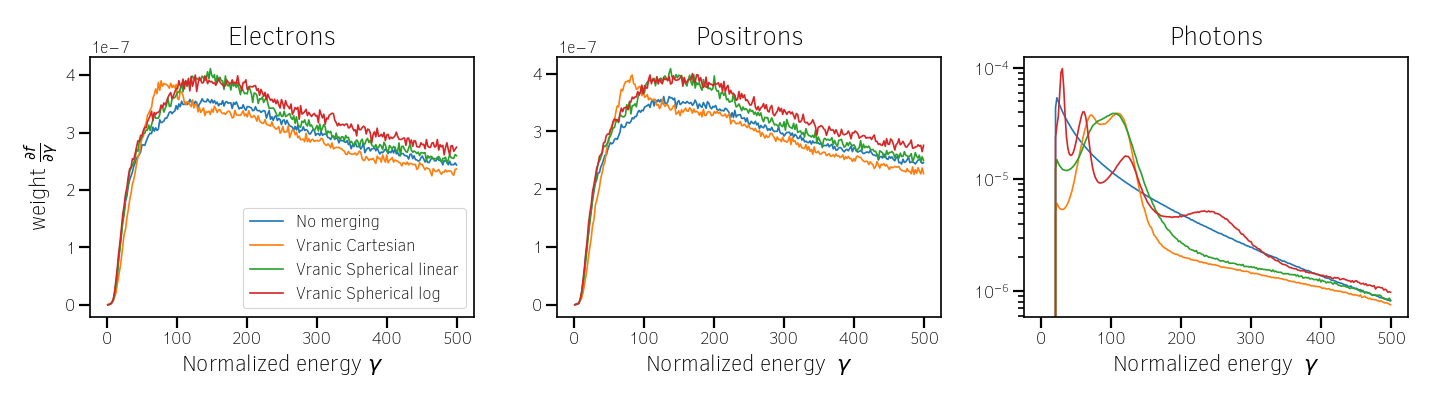

We now compare energy spectra at time \(t = 39 \omega^{-1}\) (nearly when the no merging case saturates) in Fig. 59. All merging methods significantly affect the energy distributions, and oscillations are most visible in the photon distribution due to the accumulation effect.

Fig. 59 Effect of various merging configurations on energy spectra.¶

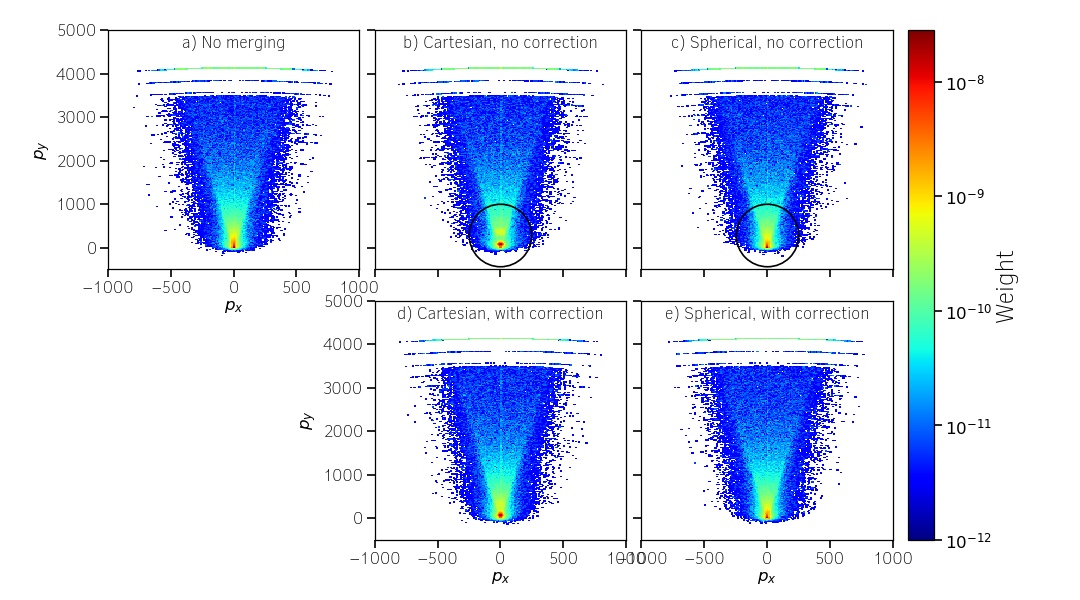

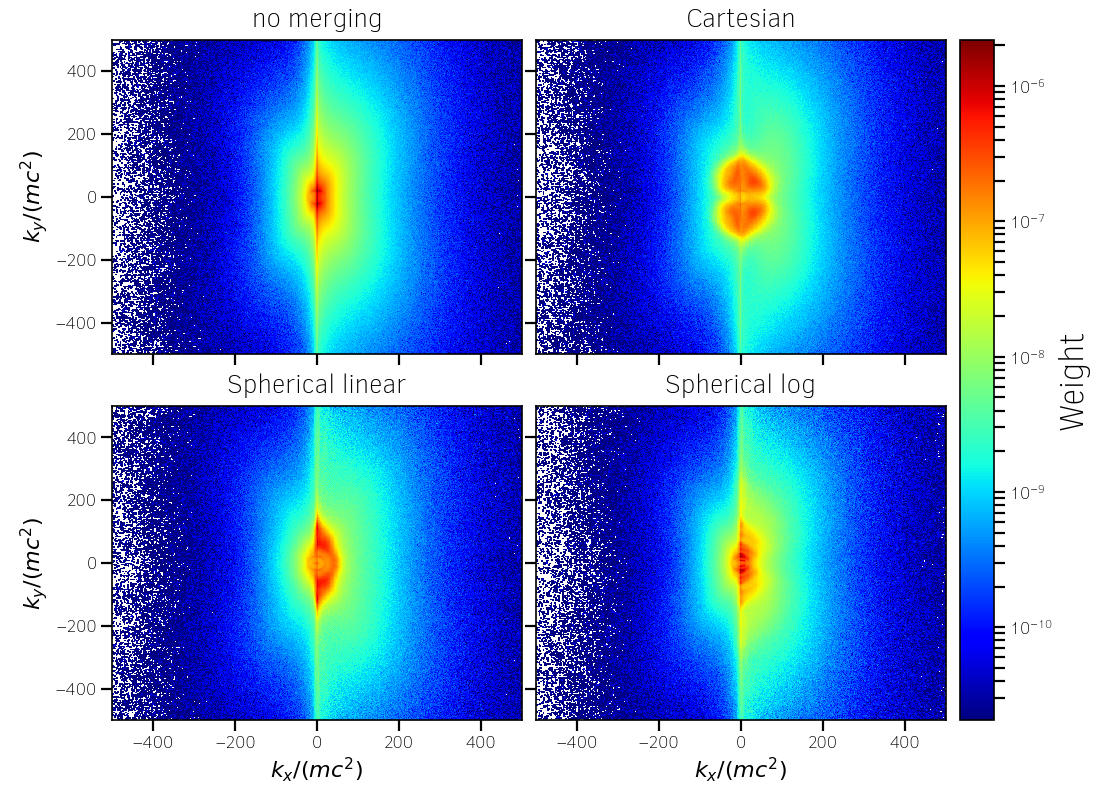

Fig. 60 shows the \(k_x-k_y\) momentum distribution of the photons. It clearly shows that, with their level of discretization, none of the merging processes can adequately reproduce the reference distribution.

Fig. 60 \(k_x-k_y\) photon momentum distributions at simulation time \(t = 39.5 \omega^{-1}\) for the various merging configurations.¶

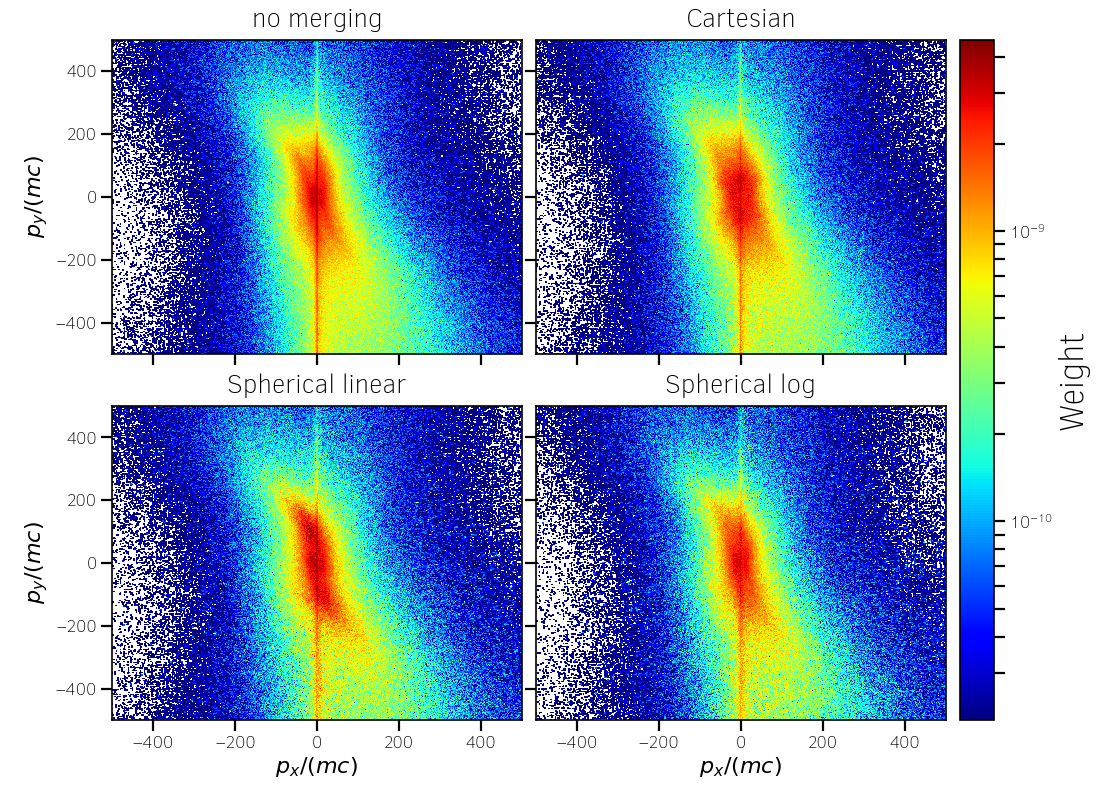

Fig. 61 shows the \(p_x-p_y\) momentum distribution of the electrons.

Fig. 61 \(p_x-p_y\) electron momentum distributions at simulation time \(t = 39.5 \omega^{-1}\) for the various merging configurations.¶

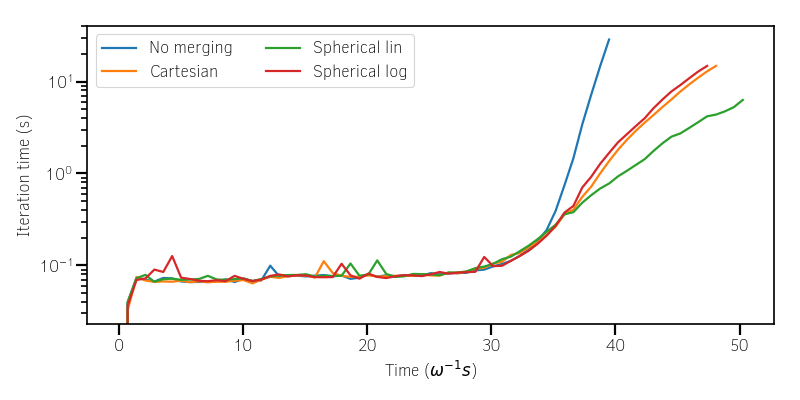

To compare the merging methods in term of performance, Fig. 62 shows the CPU time necessary to compute a numerical timestep as a function of time. The linear spherical discretization is the fastest method because the solid angle correction reduces the number of sub-groups. The logarithmic spherical discretization has the same advantage but it is slowed down by the computation of logarithms, and, in the end, similar to the original cartesian method described in [Vranic2005].

Fig. 62 Computation time per iteration as a function of time.¶

The following video illustrates the simulation benchmark in the case of a logarithmic spherical discretization. Specifically, it shows the time evolution of the electron, the positron and the photon densities in the plane x-y, integrating over z. It shows and exponential growth of photons and massive particles happening first in the y-z plane near the center of the domain then expanding longitudinally.